Sentiment Analysis of Airbnb Reviews

Published:

The ubiquity of multi-sided sharing economy platforms has accustomed most of us to assigning a ‘star rating’ after using a service. In the case of Uber, it is a 5-star scale on which driver and passenger rate each other. In the case of Airbnb, it is a 10-point scale across a variety of criteria on which hosts and renters rate each other.

Two things about these ratings jump out at me. First, we don’t put too much thought into assigning these ratings. Second, we almost always end up assigning a rating on the higher end of the scale that is available.

These ratings, which I guarantee we don’t think too much about, are in fact an important metric on which the governance of these platforms is based. For example, in the case of a hotel, the quality of the room is directly managed by the hotel management. In the case of an Airbnb, however, the strongest indicator of the quality of the room or apartment is an average of this arbitrary rating that we assign after our stay. The logic behind these ratings is that, in aggregate, they reveal the quality of service and so serve as one of the tools that help build the all-important ‘trust’ factor in the sharing economy.

To the credit of these sharing economy companies, they have been trying to improve this system and build better ways of capturing these ratings. Uber has us input the reason behind a specific rating; Airbnb has us rate the service on a variety of parameters. However, while these additional data points help build a richer picture of the ‘star rating’, the rating still suffers from the fact that there is no good way to judge how to rate the level of service, and most of us end up rating these services on the higher end of the scale that is available.

This could mean one of two things: either people are very satisfied with the service or, as discussed above, people lack a good mental model of what these ratings mean and are therefore predisposed to rate the service on the higher end of the scale.

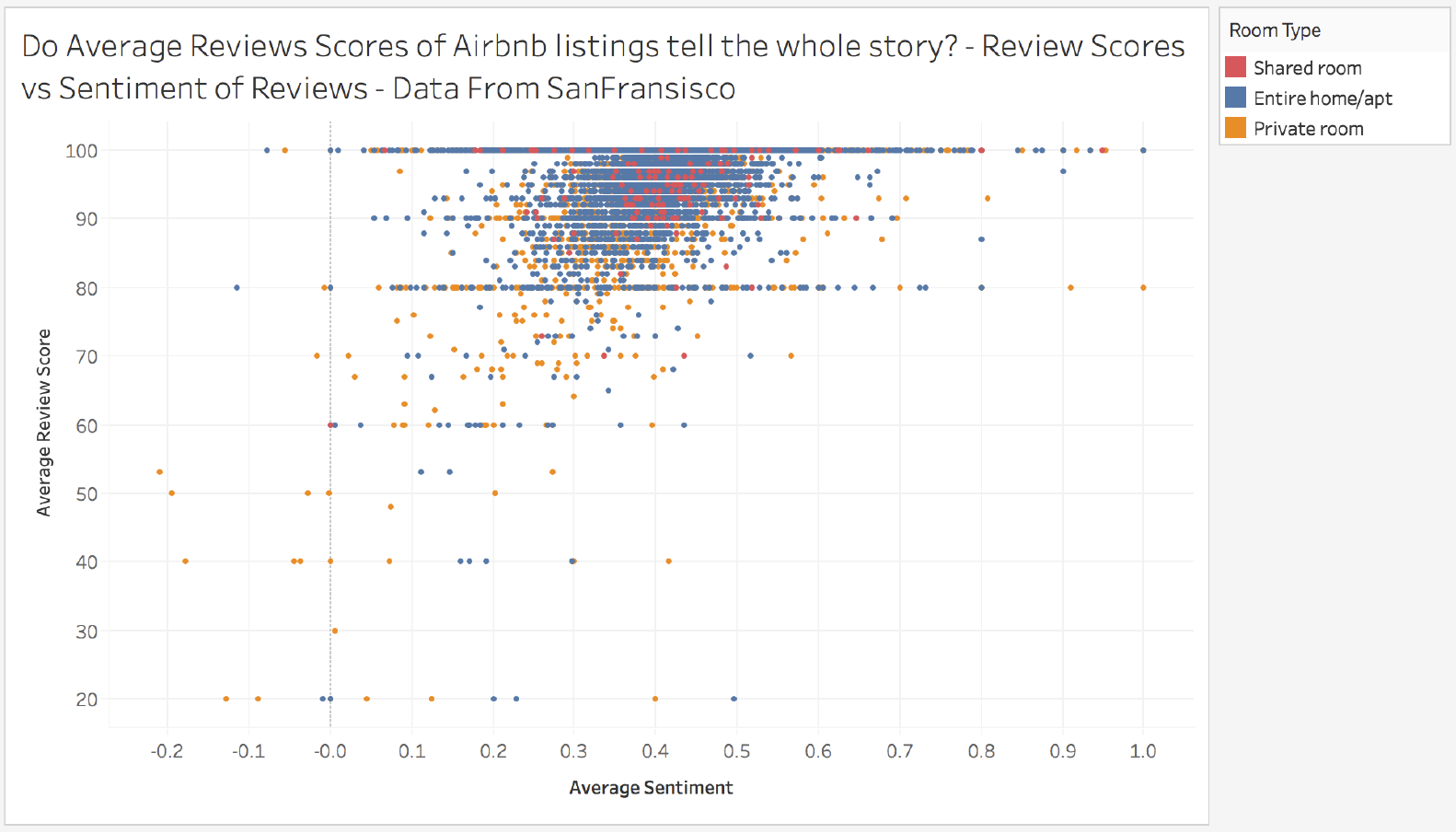

Fortunately, Airbnb collects another form of data alongside the user’s rating: a free-text review that users can leave for a property they stayed at. This presents an interesting case for using NLP techniques to analyze the average sentiment of reviews for these properties and to see how it maps to the numeric rating that users assign. It can show whether users are revealing things in free-text reviews that they don’t know how to map to a numeric rating.

What is Sentiment Analysis?

For this analysis, the sentiment of a piece of free text is a score between -1 and 1, where -1 is the most negative and 1 the most positive a sentence can be. It is calculated by looking at each of the adjectives in the sentence individually and averaging their polarities.
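To make the averaging concrete, here is a minimal sketch of a word-level polarity score. It is not the tool used for this analysis: the lexicon, its values, and the `polarity` function are hypothetical, and for simplicity it scores any word found in the lexicon rather than tagging adjectives first.

```python
import re

# Hypothetical toy lexicon mapping a word to a polarity in [-1, 1].
# The words and values are made up purely for illustration.
TOY_LEXICON = {
    "great": 0.8,
    "clean": 0.4,
    "lovely": 0.5,
    "noisy": -0.4,
    "dirty": -0.6,
    "terrible": -1.0,
}


def polarity(text, lexicon=TOY_LEXICON):
    """Average the polarity of every lexicon word found in the text.

    Returns 0.0 (neutral) when no word in the text is in the lexicon.
    """
    words = re.findall(r"[a-z']+", text.lower())
    scores = [lexicon[w] for w in words if w in lexicon]
    return sum(scores) / len(scores) if scores else 0.0


if __name__ == "__main__":
    print(polarity("Great location and a clean room"))
    print(polarity("The room was not clean"))
```

Because each word is scored on its own, "The room was not clean" receives the same positive score as "clean" would; the negation is simply not seen.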

This method raises obvious questions about how context is handled. More advanced tools and methods do handle context, but the tool used for this analysis ignores it, which is a limitation of the sentiment calculated for these reviews. Nonetheless, a scatter plot of the results is below.

The first thing that is apparent is that listing ratings are clustered at the top of the visualization, confirming my earlier assumption that we tend to give pretty high ratings. It is also apparent that the polarity of sentiment is clustered around 0.4, which is only moderately positive, even when ratings are very high. Interestingly, for properties with a rating of 100 the polarity ranges across the spectrum, again indicating that ratings alone do not capture the full sentiment of an experience with Airbnb.
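One way to check that last observation numerically, rather than by eye, is to group listings by rating and look at the spread of polarity within each group. The following is a rough sketch that assumes each listing has already been reduced to a (rating, average review polarity) pair; the function name and the sample values are made up for illustration and are not taken from the Airbnb data.

```python
from collections import defaultdict
from statistics import mean


def polarity_spread_by_rating(listings):
    """Summarize polarity per rating.

    listings: iterable of (rating, average_review_polarity) pairs.
    Returns a dict mapping rating -> (min, mean, max) polarity.
    """
    groups = defaultdict(list)
    for rating, pol in listings:
        groups[rating].append(pol)
    return {
        rating: (min(pols), mean(pols), max(pols))
        for rating, pols in sorted(groups.items())
    }


if __name__ == "__main__":
    # Made-up sample: three listings rated 100, two rated 95.
    sample = [(100, 0.75), (100, 0.25), (100, 0.5), (95, 0.25), (95, 0.5)]
    for rating, (low, avg, high) in polarity_spread_by_rating(sample).items():
        print(rating, low, avg, high)
```

A wide gap between the minimum and maximum within a single rating, as with the made-up listings rated 100 here, is the pattern the scatter plot suggests.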